Model Gallery

Discover and install AI models from our curated collection

mistral-nemo-instruct-2407-12b-thinking-m-claude-opus-high-reasoning-i1

This repository provides a **quantized version** of **Mistral-Nemo-Instruct-2407-12B** (12 billion parameters), an instruction-tuned large language model aimed at high-level reasoning tasks. Quantization compresses the original weights for efficiency while retaining key capabilities. The model is designed to generate human-like text and perform complex reasoning, making it suitable for applications that require strong language understanding and generation.

Repository: localai

meta-llama-3.1-8b-claude-imat

Meta-Llama-3.1-8B-Claude-iMat-GGUF: Quantized from Meta-Llama-3.1-8B-Claude fp16. Weighted quantizations were created using the fp16 GGUF and groups_merged.txt in 88 chunks with n_ctx=512. A static fp16 will also be included in the repo. For a brief rundown of iMatrix quant performance, please see this PR. All quants are verified working prior to uploading to the repo for your safety and convenience.
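As a rough sanity check on the calibration figures quoted above, the amount of text seen during importance-matrix computation can be estimated from the chunk count and context size. This is a minimal sketch that assumes each chunk is exactly one full context window of `n_ctx` tokens; the variable names are illustrative only.

```python
# Figures quoted in the model description.
chunks = 88
n_ctx = 512

# Assumption: every chunk fills one complete context window,
# so the calibration set is simply chunks * n_ctx tokens.
calibration_tokens = chunks * n_ctx
print(f"Approximate calibration tokens: {calibration_tokens}")
```

Under that assumption the calibration run covers roughly 45 thousand tokens of groups_merged.txt.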

Repository: localai

License: llama3.1

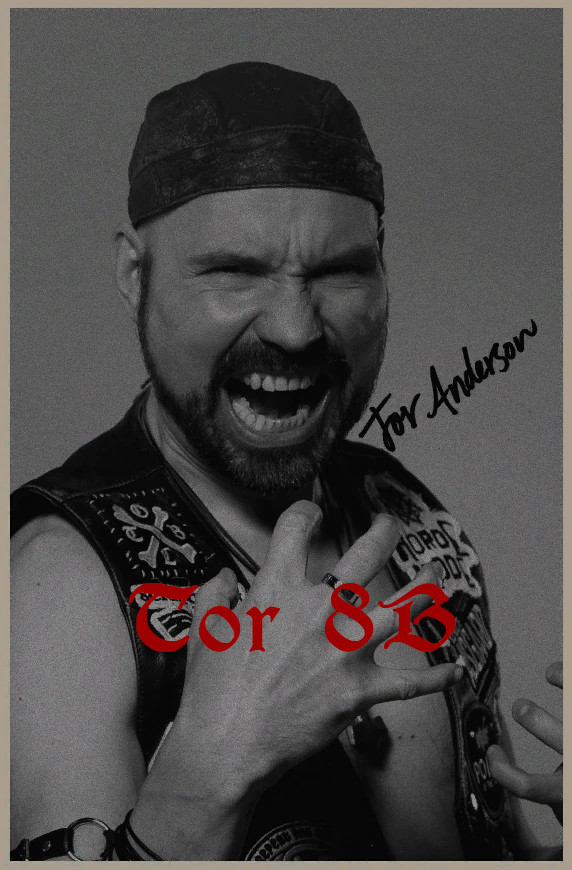

tor-8b

An earlier checkpoint of Darkens-8B, using the same configuration, that I felt was different enough from its 4-epoch cousin to release. It is fine-tuned on top of the Prune/Distill NeMo 8B done by Nvidia. This model aims to have generally good prose and writing while not falling into claude-isms.

Repository: localai

License: apache-2.0

darkens-8b

This is the fully cooked, 4-epoch version of Tor-8B. It is an experimental version: despite being trained for 4 epochs, the model feels fresh and new and is not overfit. It aims to have generally good prose and writing while not falling into claude-isms, and it follows the actions "dialogue" format heavily.

Repository: localai

License: apache-2.0

gpt-oss-20b-claude-4-distill-i1

**Model Name:** GPT-OSS 20B

**Base Model:** openai/gpt-oss-20b

**License:** Apache 2.0 (fully open for commercial and research use)

**Architecture:** 21B-parameter Mixture-of-Experts (MoE) language model

**Key Features:**

- Designed for powerful reasoning, agentic tasks, and developer applications.

- Supports configurable reasoning levels (Low, Medium, High) for balancing speed and depth.

- Native support for tool use: web browsing, code execution, function calling, and structured outputs.

- Trained on OpenAI’s **harmony response format**, which is required for proper inference.

- Optimized for efficient inference with native **MXFP4 quantization** (supports 16GB VRAM deployment).

- Fully fine-tunable and compatible with major frameworks: Transformers, vLLM, Ollama, LM Studio, and more.
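To illustrate the configurable reasoning levels listed above: the upstream openai/gpt-oss-20b model card describes selecting the level with a line such as `Reasoning: high` in the system prompt. The sketch below builds a chat message list in that style. It assumes the serving stack applies the harmony chat template for you, and that this distilled fine-tune keeps the same convention; `build_messages` and `VALID_LEVELS` are hypothetical names introduced for the example.

```python
import json

# Levels named in the feature list above.
VALID_LEVELS = ("low", "medium", "high")


def build_messages(user_prompt: str, reasoning: str = "medium") -> list[dict]:
    """Return chat messages whose system prompt requests a reasoning level.

    Hypothetical helper: assumes the runtime renders these messages
    with the harmony chat template before inference.
    """
    level = reasoning.lower()
    if level not in VALID_LEVELS:
        raise ValueError(f"reasoning must be one of {VALID_LEVELS}, got {reasoning!r}")
    return [
        {"role": "system", "content": f"Reasoning: {level}"},
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages("Summarize the trade-offs of 4-bit quantization.", "high")
print(json.dumps(messages, indent=2))
```

The resulting list can be passed as the `messages` field of an OpenAI-style chat completion request; lower levels trade reasoning depth for speed.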

**Use Cases:**

Ideal for research, local deployment, agent development, code generation, complex reasoning, and interactive applications.

**Original Model:** [openai/gpt-oss-20b](https://huggingface.co/openai/gpt-oss-20b)

*Note: This repository contains quantized versions (GGUF) by mradermacher, based on the original fine-tuned model from armand0e, which was derived from unsloth/gpt-oss-20b-unsloth-bnb-4bit.*

Repository: localai

License: apache-2.0